
History of computer graphics

Input and output (I/O)

Using computers requires some means by which information can be sent to and received from them (input and output).

Teleprinters

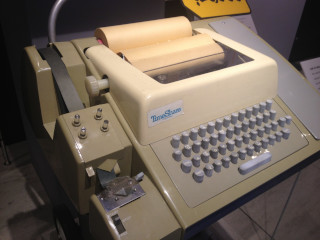

Computers used teleprinters for input and output from the early days of computing.

Punched card readers and fast printers replaced teleprinters for most purposes, but teleprinters continued to be used as interactive time-sharing terminals until video displays became widely available in the late 1970s.

Hughes telegraph, an early teleprinter (1855)

Teletype Model 33 ASR teleprinter, with punched tape reader and punch, usable as a computer terminal

One of the earliest digital computers that could output results in real time

Link Trainer controls and instruments

Link Trainer controls and instruments

|

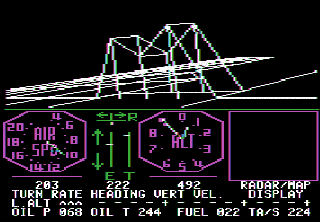

FS1 Flight Simulator (1979) for Apple II

FS1 Flight Simulator (1979) for Apple II

|

The first flight simulator for personal computers was FS1 Flight Simulator, developed by Bruce Artwick and first released for Apple II computers, which might explain why certain extraterrestrials chose this type of computer to control their spaceship.

Display technology

Electromechanical output devices were eventually replaced by electronic displays using various technologies, some of which are listed below.

- 1922: Cathode ray tube

A CRT works by electrically heating a tungsten coil which in turn heats a cathode in the rear of the CRT, causing it to emit electrons which are modulated and focused by electrodes. The electrons are steered by deflection coils or plates, and an anode accelerates them towards the phosphor-coated screen, which generates light when hit by the electrons.

- 1955: Nixie tube

During their heyday, Nixies were generally considered too expensive for use in mass-market consumer goods such as clocks.

[...]

In addition to the tube itself, another important consideration is the relatively high-voltage circuitry necessary to drive the tube.

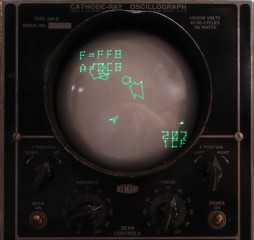

Cathode ray

Oscilloscope

screen, displaying image from a video game

Cathode ray

Oscilloscope

screen, displaying image from a video game

|

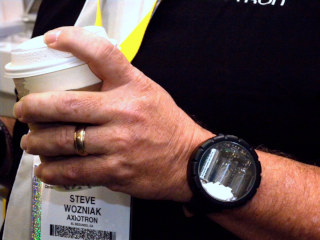

Nixie watch on the wrist of

Steve Wozniak

Nixie watch on the wrist of

Steve Wozniak

|

- 1964: Plasma display, monochrome

- 1967: Vacuum fluorescent display (VFD)
- 1968: Light-emitting diode (LED)

Calculator with vacuum fluorescent display

Calculator with LED display

Before the devices currently called monitors were developed, computers displayed some of their output and allowed the state of their internal registers to be monitored by means of front panels, which usually included indicator lamps or digit displays. These panels of lights became known as monitors. The first monitors that could display graphics were CRT vector monitors.

A vector monitor[...] is a type of CRT, similar to that of an early oscilloscope. In a vector display, the image is composed of drawn lines rather than a grid of glowing pixels[...]. The electron beam follows an arbitrary path tracing the connected sloped lines, rather than following the same horizontal raster path for all images.
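A vector image can be thought of as a display list of line segments: the beam visits only those segments, moving with the beam switched off (blanked) between segments that are not connected. The minimal Python sketch that follows assumes a hypothetical display-list format (pairs of 8-bit end points) and hypothetical "move"/"draw" commands; it does not correspond to the interface of any real vector monitor.

```python
from typing import List, Optional, Tuple

Point = Tuple[int, int]
Segment = Tuple[Point, Point]  # (start point, end point), hypothetical format


def beam_commands(display_list: List[Segment]) -> List[Tuple[str, Point]]:
    """Convert a display list into beam commands for a vector display.

    "move": beam blanked (off) while it is repositioned,
    "draw": beam on, tracing a straight line to the given point.
    Both command names are illustrative assumptions.
    """
    commands: List[Tuple[str, Point]] = []
    beam: Optional[Point] = None  # beam position unknown before the first segment
    for start, end in display_list:
        if beam != start:
            commands.append(("move", start))  # jump without drawing
        commands.append(("draw", end))
        beam = end
    return commands


# A triangle made of connected lines, followed by one separate line
scene = [
    ((10, 10), (100, 10)),
    ((100, 10), (55, 90)),
    ((55, 90), (10, 10)),
    ((150, 20), (200, 80)),
]
for command in beam_commands(scene):
    print(command)
```

The connected triangle needs only one blanked move, and the beam never scans the empty parts of the screen. Refreshing such a display means replaying the list many times per second.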

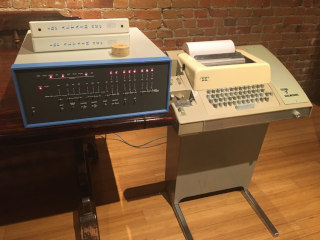

Altair 8800 computer (1975) with front panel and Model 33 ASR Teletype for I/O

Vector monitor with storage tube, a type of CRT

A CRT vector monitor would either have internal memory (which was very expensive at the time) for storing the parameters necessary to draw the image and refresh it many times a second, or use a storage tube, which keeps the drawn image visible on the screen without having to refresh it.

As can be seen, the Atari 800XL had a CPU which could function at a much higher clock speed than normal, when the computer was operated by the right person.

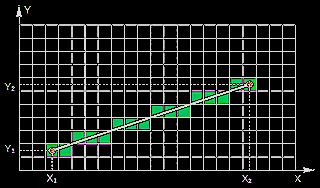

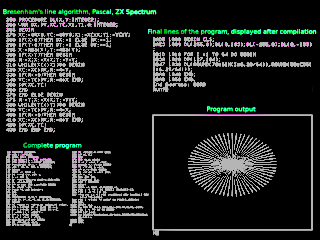

On raster displays, sloped lines cannot be drawn directly, so they have to be converted into a series of pixels by means of algorithms such as Bresenham's line algorithm.
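Bresenham's algorithm needs only integer additions, subtractions and comparisons, which made it well suited to 8-bit home computers. The following is a minimal Python sketch of the commonly used all-octant integer formulation; it is not a translation of the HiSoft Pascal listing pictured on this page, and the function name is illustrative.

```python
from typing import List, Tuple


def bresenham_line(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Return the pixels approximating the line from (x0, y0) to (x1, y1).

    Integer-only version working in all octants: `err` tracks the
    accumulated error between the ideal line and the chosen pixels.
    """
    points = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)          # negative by convention in this formulation
    sx = 1 if x0 < x1 else -1   # step direction along x
    sy = 1 if y0 < y1 else -1   # step direction along y
    err = dx + dy
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:            # error allows a step in x
            err += dy
            x0 += sx
        if e2 <= dx:            # error allows a step in y
            err += dx
            y0 += sy
    return points


print(bresenham_line(0, 0, 5, 2))
# [(0, 0), (1, 0), (2, 1), (3, 1), (4, 2), (5, 2)]
```

Each chosen pixel is the one closest to the ideal line y = 0.4x, yet no multiplication or division is performed inside the loop.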

Line drawn on raster display

Bresenham's line algorithm implemented in HiSoft Pascal 4 for ZX Spectrum

In general, a raster display requires more memory for storing the image than a vector display. For instance, a monochrome display with a resolution of 256*256 pixels requires 256*256 = 65536 bits = 65536/8 = 8192 bytes = 8 KiB of memory. A vector display with the same effective resolution, assuming that the coordinates of the 2 end points of each line are stored and that each coordinate requires 8 bits = 1 byte (2^8 = 256), requires 4*n_L bytes (2 points * 2 coordinates * 1 byte per line), where n_L is the number of lines drawn. Therefore, under these conditions, an image composed of fewer than 8192/4 = 2048 lines requires less memory if displayed on a vector display. However, since a vector display, unlike a raster display, does not have a fixed refresh rate, the computer can, in certain cases, send the necessary parameters directly to the display as they are calculated by the program, in which case no special memory to store the image is needed at all. This approach was used in a video game console called Vectrex.
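The arithmetic in the previous paragraph can be checked with a few lines of Python, under the same assumptions as the text (1 bit per pixel, 1 byte per coordinate, 2 end points per line); the function names are purely illustrative.

```python
def raster_bytes(width: int, height: int, bits_per_pixel: int = 1) -> int:
    """Framebuffer size in bytes for a raster display."""
    return width * height * bits_per_pixel // 8


def vector_bytes(num_lines: int, bytes_per_coordinate: int = 1) -> int:
    """Display-list size: 2 end points * 2 coordinates (x, y) per line."""
    return num_lines * 2 * 2 * bytes_per_coordinate


raster = raster_bytes(256, 256)          # 8192 bytes = 8 KiB
break_even = raster // vector_bytes(1)   # 8192 / 4 = 2048 lines
print(raster, break_even)                # 8192 2048
print(vector_bytes(2047) < raster)       # True
print(vector_bytes(2048) < raster)       # False
```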

The computer runs the game's computer code, watches the user's inputs, runs the sound generator, and controls the vector generator to make the screen drawings. The vector generator is an all-analog design using two integrators: X and Y. The computer sets the integration rates using a digital-to-analog converter.

[...]

Rather than use sawtooth waves to direct the internal electron beam in a raster pattern, computer-controlled integrators feed linear amplifiers to drive the deflection yoke.
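The principle described in the quote can be illustrated with a simplified discrete-time model: each integrator adds its input rate to its output at every time step, so holding both rates constant moves the beam along a straight line. This is a hypothetical Python sketch (the "tick" time step and both function names are assumptions), not a model of the actual Vectrex circuitry, which is analog and involves details not modeled here.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def rates_for_line(start: Point, end: Point, ticks: int) -> Tuple[float, float]:
    """Integration rates (per tick) that take the beam from start to end in `ticks`.

    In the real console the rates are set through a DAC; here they are plain floats.
    """
    return (end[0] - start[0]) / ticks, (end[1] - start[1]) / ticks


def integrate_beam(start: Point, rate_x: float, rate_y: float, ticks: int) -> List[Point]:
    """Idealized X and Y integrators: each output grows by its rate every tick.

    With constant rates, x and y change in fixed proportion, so the beam
    moves along a straight line (no raster scan is involved).
    """
    x, y = start
    path = [(x, y)]
    for _ in range(ticks):
        x += rate_x  # X integrator accumulates its input
        y += rate_y  # Y integrator accumulates its input
        path.append((x, y))
    return path


rx, ry = rates_for_line((0, 0), (40, 20), ticks=10)
path = integrate_beam((0, 0), rx, ry, ticks=10)
print(rx, ry)      # 4.0 2.0
print(path[-1])    # (40.0, 20.0)
```

Every intermediate point lies on the line y = x/2, which is why setting two rates is enough to draw a sloped line.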

Hyperchase (car racing game), Vectrex console

Hyperchase (car racing game), Vectrex console

|

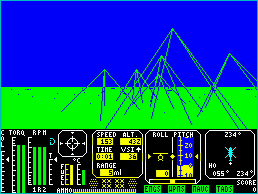

Tomahawk

(helicopter simulator), ZX Spectrum

Tomahawk

(helicopter simulator), ZX Spectrum

|

Graphics processing units and 3D graphics

The data for the pixels displayed on a raster display is usually stored in a segment of random-access memory (RAM), called a framebuffer.
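For a monochrome display, the framebuffer can be a plain array of bytes in which each bit is one pixel. The Python sketch that follows assumes a simple row-major layout with the leftmost pixel in the most significant bit of each byte; real machines often used other layouts (the ZX Spectrum's, for instance, is not linear), and the class name is hypothetical.

```python
from typing import Tuple


class MonoFramebuffer:
    """Monochrome framebuffer: 1 bit per pixel, 8 pixels per byte.

    Assumed layout: rows stored one after another (row-major), with the
    leftmost pixel of each byte in its most significant bit.
    """

    def __init__(self, width: int, height: int) -> None:
        if width % 8 != 0:
            raise ValueError("width must be a multiple of 8")
        self.width = width
        self.height = height
        self.bytes_per_row = width // 8
        self.data = bytearray(self.bytes_per_row * height)  # all pixels off

    def _locate(self, x: int, y: int) -> Tuple[int, int]:
        """Return (byte index, bit mask) of pixel (x, y)."""
        if not (0 <= x < self.width and 0 <= y < self.height):
            raise IndexError("pixel outside the framebuffer")
        return y * self.bytes_per_row + x // 8, 0x80 >> (x % 8)

    def set_pixel(self, x: int, y: int, on: bool = True) -> None:
        index, mask = self._locate(x, y)
        if on:
            self.data[index] |= mask          # turn the bit on
        else:
            self.data[index] &= ~mask & 0xFF  # turn the bit off

    def get_pixel(self, x: int, y: int) -> bool:
        index, mask = self._locate(x, y)
        return bool(self.data[index] & mask)


fb = MonoFramebuffer(256, 256)
print(len(fb.data))   # 8192 bytes, as computed for a 256*256 monochrome display
fb.set_pixel(9, 1)
print(fb.data[33])    # 64: byte 1*32 + 9//8 = 33, bit mask 0x80 >> 1
```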

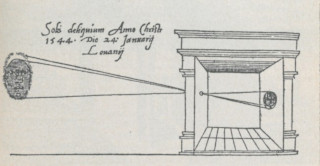

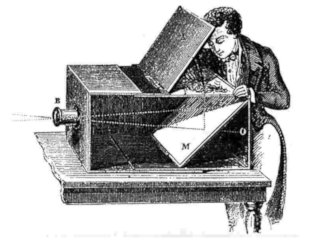

The expression 3D computer graphics refers to mathematically projecting 3D objects, described by parameters stored in a computer's memory, onto a 2D surface and then displaying the resulting image. In principle, this means photographing objects that don't exist with a camera that doesn't exist and then displaying the picture, which results from calculations performed by a CPU or a GPU, on a computer screen. Photography is a method for creating images by recording light emitted or reflected by objects onto a photosensitive surface. The main optical device required in photography is the camera obscura, which was initially used as an aid for drawing or painting, for studying eclipses without the risk of damaging the eyes by looking directly into the sun, etc.
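In code, the "camera that doesn't exist" reduces to a perspective (pinhole) projection. A minimal Python sketch, assuming a camera at the origin looking along the positive z axis and an image plane at distance `focal_length` in front of it (a real camera obscura forms an inverted image behind the pinhole; placing the plane in front avoids the inversion). The function name is illustrative.

```python
from typing import Tuple


def project(point: Tuple[float, float, float], focal_length: float) -> Tuple[float, float]:
    """Perspective (pinhole camera) projection onto the plane z = focal_length.

    Assumes the camera is at the origin looking along +z. By similar
    triangles, a point (x, y, z) lands at (f * x / z, f * y / z).
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("the point must be in front of the camera (z > 0)")
    return focal_length * x / z, focal_length * y / z


# The same point twice as far away lands half as far from the image center
print(project((2.0, 1.0, 4.0), focal_length=2.0))  # (1.0, 0.5)
print(project((2.0, 1.0, 8.0), focal_length=2.0))  # (0.5, 0.25)
```

To display a wireframe object, the projected end points of its edges would then be scaled to pixel coordinates and joined with a line algorithm such as Bresenham's.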

Advanced GPUs

In 1985, the Commodore Amiga featured a custom graphics chip, with [...] a coprocessor with its own simple instruction set, capable of manipulating graphics hardware registers[...]. In 1986, Texas Instruments released the TMS34010, the first fully programmable graphics processor. It could run general-purpose code, but it had a graphics-oriented instruction set.

[...]

In 1987, the IBM 8514 graphics system was released as one of the first video cards for IBM PC compatibles to implement fixed-function 2D primitives in electronic hardware.

[...]

By 1995, all major PC graphics chip makers had added 2D acceleration support to their chips. By this time, fixed-function Windows accelerators had surpassed expensive general-purpose graphics coprocessors in Windows performance, and these coprocessors faded away from the PC market.

[...]

The term "GPU" was coined by Sony in reference to the 32-bit Sony GPU (designed by Toshiba) in the PlayStation video game console, released in 1994.

In the PC world, notable failed first tries for low-cost 3D graphics chips were the S3 ViRGE, ATI Rage, and Matrox Mystique. These chips were essentially previous-generation 2D accelerators with 3D features bolted on. [...] Initially, performance 3D graphics were possible only with discrete boards dedicated to accelerating [fixed] 3D functions (and lacking 2D GUI acceleration entirely) such as the PowerVR and the 3dfx Voodoo.

[...]

[In 2001,] Nvidia was first to produce a chip capable of programmable shading; the GeForce 3 (code named NV20).

[...]

With the introduction of the Nvidia GeForce 8 series, [...] GPUs became more generalized computing devices. Today, parallel GPUs have begun making computational inroads against the CPU, and a subfield of research, dubbed GPU Computing or GPGPU for General Purpose Computing on GPU, has found its way into fields as diverse as machine learning, oil exploration, scientific image processing, linear algebra, statistics, [...]

The most common criticism of GTK is the lack of backward-compatibility in major updates, most notably in the application programming interface and theming.

Fortunately, many programs (including advanced 3D renderers and even really good 3D games) that don't require specialised GPUs or graphics libraries are still being developed.

Final considerations

Flight simulator from 2020

Flight simulator from 1989

Before deciding which of the 2 screenshots above looks better, you might care to notice that the instrument panel is a lot more readable in the second image, displayed by the simulator from 1989, which runs perfectly on a 286 CPU and does not use a 3D accelerator. Since computer games are made for the purpose of entertainment, you might also consider some other aspects, such as:

Photograph of a street with houses

Painting of another street with houses - inferior to the photograph on the left, because it doesn't have the same amount of detail(?)

Besides, the Link Trainer

Link's first military sales came as a result of the Air Mail scandal, when the Army Air Corps took over carriage of U.S. Air Mail. Twelve pilots were killed in a 78-day period due to their unfamiliarity with Instrument Flying Conditions. [...] The Air Corps was given a stark demonstration of the potential of instrument training when, in 1934, Link flew in to a meeting in conditions of fog that the Air Corps evaluation team regarded as unflyable.

Therefore, people interested in the newest CPUs or video cards because they want to play the most realistic-looking games might want to consider some of the aspects presented here, not to mention the disaster caused by electronic waste.